Gaussian Splatting: Rapid 3D with AI Tools

Thanks to recently released AI tools, it is now possible to create realistic-looking 3D environments using only simple photos or videos of a location. One of the most promising of these techniques is Gaussian Splatting. It offers a fast way to generate 3D content of a kind that used to take days or even weeks to produce, lowering the technical bar to creating immersive 3D experiences.

Background

In the educational field, many of us are familiar with 360° video and its pedagogical applications. 360° video is appealing because it is easy to create and view and can achieve a high degree of photorealism, yet it has one flaw: the viewer is “stuck” in one location, constrained to looking around the inside of a video sphere. Because of this limitation, many creators have opted instead for fully computer-generated VR, which allows both interactivity and full freedom of movement. Such VR, however, usually looks like a video game, and photorealism falls by the wayside.

What if it were possible to combine the photorealism of 360° video with the freedom of movement offered by true VR? In other words, what if we could make a navigable 3D environment that looks real and is easy to create? Thanks to Gaussian Splatting, this just might be possible!

First published at SIGGRAPH 2023 (a leading conference centred upon computer graphics and interactive techniques), Gaussian Splatting is a novel machine learning approach that allows us to build navigable 3D environments from simple photos or videos. The images are first processed with a technique called structure-from-motion, which calculates where in the space each picture was taken and produces a sparse point cloud describing the geometry of the scene. Next, the training begins: each point becomes a small, semi-transparent, ellipsoid-shaped “splat” (a 3D Gaussian), and an iterative optimisation adjusts the position, shape, colour and opacity of every splat, comparing rendered views with the original images and refining them many thousands of times. No mesh or texture map is produced; instead, when the scene is rendered, overlapping splats are blended together with their neighbours. The result is a smooth, continuous image with a high degree of photorealism.
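To make the blending step more concrete, here is a minimal Python sketch of how one pixel's colour could be computed from splats that have already been projected onto the screen as 2D ellipses. It is a simplification under stated assumptions: real implementations project each 3D Gaussian to 2D and run on the GPU in tiles, and the function names and the dictionary layout of a splat are hypothetical. The opacity clamp (0.99) and the skip and early-stop thresholds are similar to those in the original authors' reference rasterizer.

```python
import math

def gaussian_2d(px, py, mean, cov):
    """Unnormalised 2D Gaussian falloff of a projected splat at pixel (px, py).

    mean is (mx, my); cov is ((a, b), (b, c)), a symmetric 2x2 covariance.
    """
    (a, b), (_, c) = cov
    det = a * c - b * b
    # Entries of the inverse of the 2x2 covariance matrix
    inv_a, inv_b, inv_c = c / det, -b / det, a / det
    dx, dy = px - mean[0], py - mean[1]
    power = -0.5 * (inv_a * dx * dx + 2 * inv_b * dx * dy + inv_c * dy * dy)
    return math.exp(power)

def composite_pixel(px, py, splats):
    """Front-to-back alpha compositing of splats at one pixel.

    Hypothetical splat format: dict with 'depth', 'mean', 'cov',
    'opacity' (0..1) and 'color' (r, g, b in 0..1).
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed by nearer splats
    for s in sorted(splats, key=lambda sp: sp["depth"]):  # nearest first
        alpha = min(0.99, s["opacity"] * gaussian_2d(px, py, s["mean"], s["cov"]))
        if alpha < 1.0 / 255.0:
            continue  # contribution too small to matter
        for i in range(3):
            color[i] += s["color"][i] * alpha * transmittance
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:
            break  # pixel is effectively opaque; farther splats are hidden
    return tuple(color)

# Two splats centred on the same pixel: a half-transparent red one in front
# of an opaque blue one (whose opacity is clamped to 0.99).
splats = [
    {"depth": 2.0, "mean": (0, 0), "cov": ((4, 0), (0, 4)), "opacity": 1.0, "color": (0, 0, 1)},
    {"depth": 1.0, "mean": (0, 0), "cov": ((4, 0), (0, 4)), "opacity": 0.5, "color": (1, 0, 0)},
]
print(composite_pixel(0, 0, splats))  # → (0.5, 0.0, 0.495)
```

Compositing front to back lets the loop stop as soon as a pixel is effectively opaque, which is one reason splats can be rendered quickly.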

Compared with the similar technologies that came before it, such as Neural Radiance Fields (NeRFs), Gaussian Splatting trains much faster and renders in real time, while matching or exceeding their photo-accuracy. Speed and accuracy matter in any media project, and especially in educational projects, which are often large in scope. Consider, for instance, an archaeology project that seeks to capture in 3D the environments of many sites around the world: here, the speed and accuracy of Gaussian Splatting would provide a clear advantage over previous technologies.

Building a Scene

To train a model, we need some data, or in this case, some video. Capturing video for a 3D model is not a difficult process, but it should be a thorough one. Walking around the scene, the camera operator should aim for as much coverage as possible: not only shooting in all relevant directions, but also from different distances to objects and at several different heights. At LLInC, we deployed our trusty 360° camera, which let us shoot in all directions as quickly as possible. We then exported four “framed” videos, that is, fixed perspectives from within the 360° sphere.
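The post doesn't say which directions the four framed views faced or which tool exported them. As an illustration of the geometry only, the hypothetical function below maps a pixel of a virtual pinhole camera (with a given field of view, yaw and pitch) to the point in an equirectangular 360° frame it should be sampled from. Resampling every output pixel this way, for example at yaws of 0°, 90°, 180° and 270°, would yield four framed views. The axis conventions and parameter names are our own assumptions.

```python
import math

def perspective_to_equirect(u, v, out_w, out_h, fov_deg, yaw_deg, pitch_deg, eq_w, eq_h):
    """Map pixel (u, v) of a virtual pinhole view to source coordinates
    in an equirectangular 360° frame of size eq_w x eq_h (hypothetical helper).

    Camera axes: x right, y down, z forward. Positive yaw turns right,
    positive pitch tilts up. Pixel-centre offsets are ignored for brevity.
    """
    # Focal length in pixels, derived from the horizontal field of view
    f = (out_w / 2) / math.tan(math.radians(fov_deg) / 2)
    x, y, z = (u - out_w / 2) / f, (v - out_h / 2) / f, 1.0

    # Pitch: rotate about the x-axis so "forward" tilts towards "up" (-y)
    p = math.radians(pitch_deg)
    y, z = y * math.cos(p) - z * math.sin(p), y * math.sin(p) + z * math.cos(p)

    # Yaw: rotate about the y-axis so "forward" turns towards "right" (+x)
    t = math.radians(yaw_deg)
    x, z = x * math.cos(t) + z * math.sin(t), -x * math.sin(t) + z * math.cos(t)

    lon = math.atan2(x, z)                  # -pi..pi, 0 = straight ahead
    lat = math.atan2(-y, math.hypot(x, z))  # -pi/2..pi/2, positive = up
    return (lon / (2 * math.pi) + 0.5) * eq_w, (0.5 - lat / math.pi) * eq_h

# Centre pixel of a 1920x1080, 90° view looking straight ahead
print(perspective_to_equirect(960, 540, 1920, 1080, 90, 0, 0, 5760, 2880))  # → (2880.0, 1440.0)
```

In practice, a library would do the per-pixel sampling and interpolation; the point here is only that each framed view is a fixed direction and field of view cut out of the sphere.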

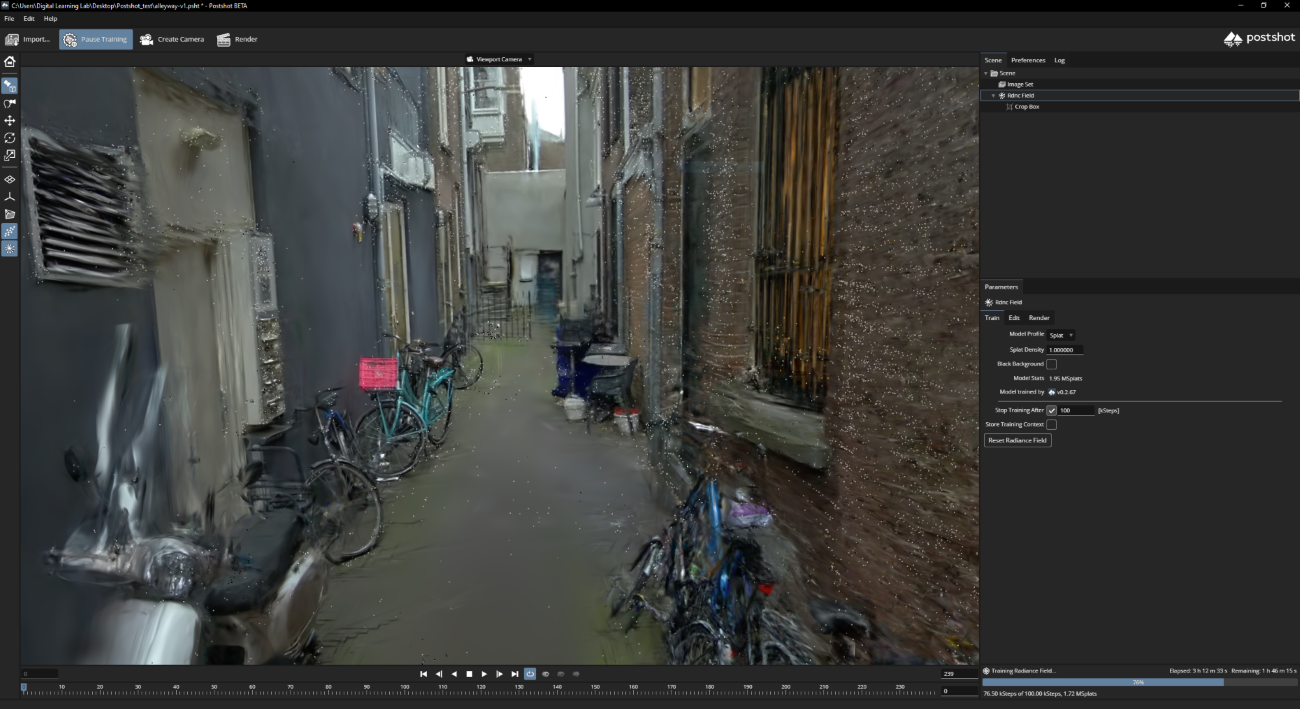

Once the video is ready, users can choose whether to train their models using a cloud service or on a local computer. At LLInC, we have (thus far) opted for the latter, using the desktop program Postshot to train on our own hardware. We chose this route because local training affords much more granular control and, in our experience, yields better results than the cloud options. The fact that the cloud options are currently commercial software was another significant consideration. That said, the cloud route is currently more user-friendly, since it can be used entirely from a smartphone and the results can be hosted on the web. From our research so far, Luma.ai and Polycam appear to be the strongest cloud options.

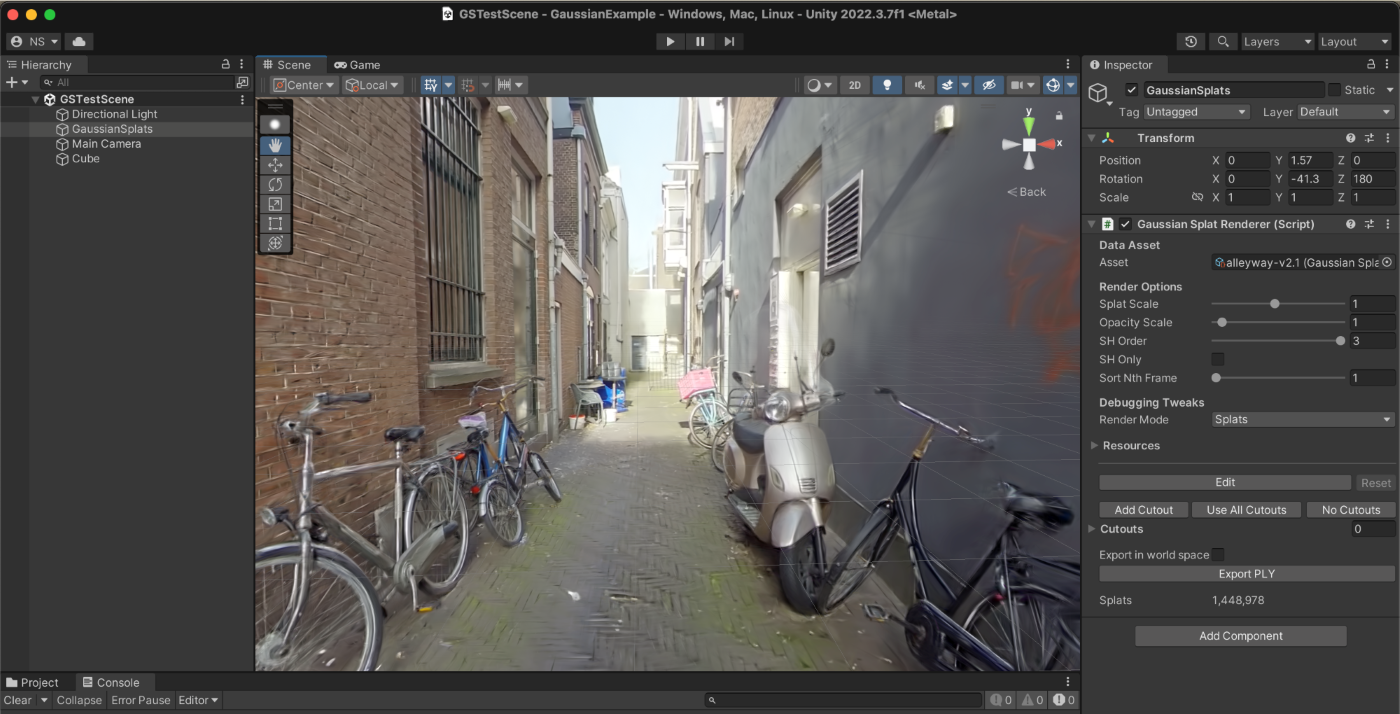

Training time depends on the size and complexity of the scene, as well as on the power of the computer used for training. The alleyway near our LLInC office in The Hague required around eight hours of training. The result was impressive, and we could easily export the final 3D model for use in Unity, our game development software of choice. Once we had made the scene navigable in Unity, we exported it to a VR headset for further exploration.
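The post doesn't describe what the exported model actually contains. Assuming the export follows the `.ply` layout written by the original 3DGS research code (one float-only vertex element with properties such as `x`, `y`, `z`, `f_dc_0..2`, `opacity`, `scale_0..2` and `rot_0..3`), the minimal sketch below reads the splats and converts the stored, pre-activation values into usable parameters. The function names are hypothetical, the reader handles only binary little-endian files whose first element is `vertex`, and view-dependent colour (`f_rest_*`) is ignored.

```python
import math
import struct

SH_C0 = 0.28209479177387814  # zeroth-order spherical-harmonic constant

def read_ply_vertices(f):
    """Yield each vertex of a binary little-endian PLY stream as a dict.

    Assumes 'vertex' is the first element and all of its properties are
    32-bit floats, as in typical 3DGS exports (an assumption, not a spec).
    """
    if f.readline().strip() != b"ply":
        raise ValueError("not a PLY file")
    props, count, element = [], 0, None
    while True:
        line = f.readline()
        if not line:
            raise ValueError("unexpected end of header")
        parts = line.split()
        if not parts or parts[0] == b"comment":
            continue
        if parts[0] == b"end_header":
            break
        if parts[0] == b"format" and parts[1] != b"binary_little_endian":
            raise ValueError("only binary_little_endian PLY is handled here")
        elif parts[0] == b"element":
            element = parts[1]
            if element == b"vertex":
                count = int(parts[2])
        elif parts[0] == b"property" and element == b"vertex":
            if parts[1] not in (b"float", b"float32"):
                raise ValueError("non-float vertex property: " + parts[2].decode())
            props.append(parts[2].decode())
    row = struct.Struct("<" + "f" * len(props))
    for _ in range(count):
        yield dict(zip(props, row.unpack(f.read(row.size))))

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def decode_splat(raw):
    """Convert one splat's stored values into renderable parameters."""
    qw, qx, qy, qz = (raw[f"rot_{i}"] for i in range(4))  # real part first
    norm = math.sqrt(qw * qw + qx * qx + qy * qy + qz * qz)
    return {
        "position": (raw["x"], raw["y"], raw["z"]),
        # Base colour from the DC spherical-harmonic term, clamped to [0, 1]
        "color": tuple(min(1.0, max(0.0, 0.5 + SH_C0 * raw[f"f_dc_{i}"])) for i in range(3)),
        "opacity": sigmoid(raw["opacity"]),                             # stored as a logit
        "scale": tuple(math.exp(raw[f"scale_{i}"]) for i in range(3)),  # stored as a log
        "rotation": (qw / norm, qx / norm, qy / norm, qz / norm),       # unit quaternion
    }
```

Usage, with a hypothetical file name: `with open("scene.ply", "rb") as f: splats = [decode_splat(r) for r in read_ply_vertices(f)]`. Counting the splats this way also gives a rough sense of how heavy a scene will be to render in a headset.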

Use in Education

This technology has many promising applications for us at LLInC, as well as more broadly within the field of immersive education. We are already working on a pilot project with the Law Faculty in which elements of an urban scene can be modified to test the public’s response to planning interventions. But the sky is the limit: any application that calls for a 3D environment is now much easier to build than before, and as Gaussian Splatting becomes more widespread, the tools for deploying it will likewise become easier to use.

Behind the scenes, AI models are changing production pipelines in numerous fields, and media production for education is no exception. At LLInC, our Data and Media team will be keeping a close eye on the results of this year’s SIGGRAPH conference, where radiance fields (the family of techniques to which Gaussian Splatting belongs) now span four distinct categories. Stay tuned!

Useful Links

https://github.com/aras-p/UnityGaussianSplatting (Plugin for Unity)

https://lumalabs.ai/interactive-scenes

https://lumalabs.ai/luma-web-library

https://poly.cam/tools/gaussian-splatting

https://radiancefields.com (further reading)

Credit to Nathan Saucier for all pictures.