The possibilities and problems of AI and its detection in education

The rise of Artificial Intelligence (AI), and its rapid development since the release of ChatGPT, has both excited and worried people inside and outside the realm of education. Understandably so, as such powerful tools are likely to lead us down a path full of question marks. Yet, like any new and disruptive technology, Large Language Models (LLMs) like GPT offer both opportunities and risks. It is therefore a responsibility we should all take upon ourselves to be as informed as possible, while maintaining an open and constructive, yet critical, attitude towards this technology.

Within the educational community, much of the discussion has centered on the topic of detection. Can detection be an effective tool against fraudulent use of LLMs like GPT? Is using these models even fraudulent in the first place? Or should we allow, or even encourage, the use of GPT and similar tools? By focusing on detection, we hope to give some insight into several topics surrounding the use of LLMs in education. In this article we’ll explore what is and isn’t possible in AI detection, and what the challenges are.

Detection tools are only as good as the tool they try to detect

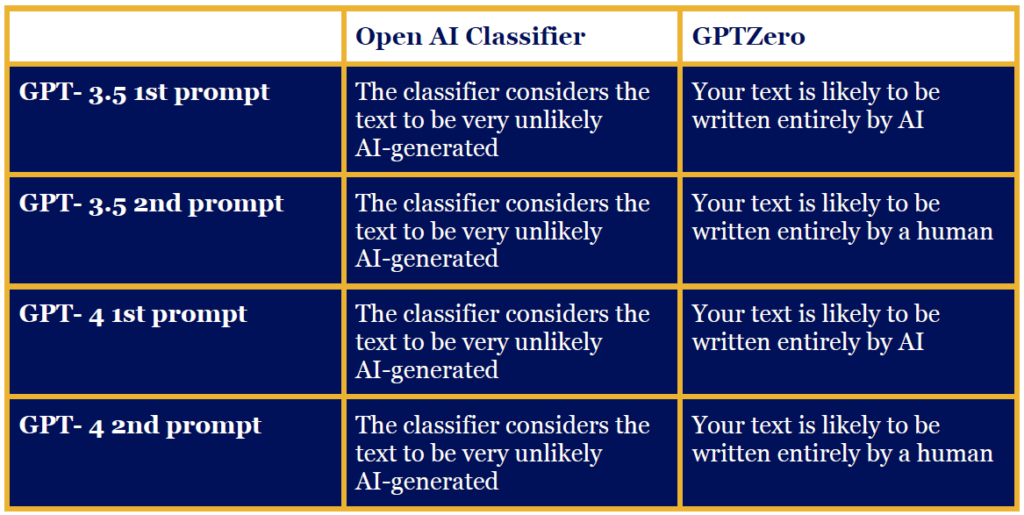

Let’s start by stating what’s probably the biggest issue with AI detection: these tools are usually based on the very same kind of models they try to detect. OpenAI’s detection tool, for example, runs on their GPT model, just like ChatGPT, the tool whose usage it is trying to detect. In theory, this means that detection tools are, at best, only as good as the tool they are trying to detect. Unfortunately, in practice this means they’re always behind. To illustrate, here is how detection tools performed on ChatGPT’s output for the following two prompts:

1st prompt: “Write an essay on the history of Azerbaijan”

2nd prompt: “Now rewrite it less in the style of an AI”

The assignment given to ChatGPT was to “write an essay on the history of Azerbaijan”. It did this quite well and accurately, and most detectors were able to detect the use of AI in the written content. However, when we asked ChatGPT to rewrite the essay “less in the style of an AI”, none of the detectors had enough confidence to state that the text was written by an AI. Note also that the AI detector from OpenAI, the company that built ChatGPT, seems to be the least effective at detecting its own model (it failed every time). GPTZero did flag some sentences that it believed could have been written by an AI, but such an outcome would clearly be an incredibly thin basis on which to confront a student.

OpenAI acknowledges this itself. In a blog post about its own classifier, it cites several limitations and notes that “it should not be used as a primary decision-making tool, but instead as a complement to other methods of determining the source of a piece of text.” GPTZero, created by a 22-year-old student at Princeton University, does seem to perform better than OpenAI’s own tool, but as seen above, it still seems to have the same limitations. Detection tools will probably improve in the future, but so will the models they try to detect. This is likely to become an endless arms race, with the detectors most often running helplessly behind the models.

Detection in education

There are other issues with detection too. How do you confront a student with the “evidence” you have, and what “evidence” do you really have? AI models are black boxes: as users, we don’t get to see how a certain output was produced from the given input. The same goes for AI detectors. A detector might indicate what it thinks was written by an AI, even on a per-sentence basis, but unlike regular plagiarism detectors, which can point to the matching source text, it cannot prove why this is the case or explain exactly how it reached its conclusion. This means the outcome will always remain an assumption, sometimes stronger than others, but an assumption nonetheless, which makes for a very weak case. The outcome of these tools could, of course, still be used to start an open discussion on AI usage with students, as they too should be part of the discussion around AI and its ethics in education.

Taken together, these issues raise the question of whether detection is worth applying at all. While we have no definitive answer, and AI detectors might improve in ways we don’t yet foresee, for now it seems better to focus on other ways of handling AI and LLMs in education. Different approaches are being explored and a lot is yet to be learned. Some solutions are simple, ranging from good old pen-and-paper classroom assignments (although these mean a lot of extra work for the teacher) to assignments in which students are deliberately asked to use AI, or are given an AI-generated answer to the question at hand and asked to reflect on it.

A shift from detection to engagement

Another possibility is to encourage the use of AI in certain cases. With the right mindset (which needs to be taught to students), a tool like ChatGPT could be a learning tool in and of itself. Imagine a grammar course in a foreign language. A student could ask ChatGPT why a sentence is constructed the way it is, have it explain every part, and even ask it to suggest variations. The catch here is that the answer might be false or even downright absurd. When students are aware of this, GPT is no longer a tool whose output they blindly accept as true; it becomes a sparring partner. A student could confront GPT with a false answer, ask it to reconsider, or suggest what the student thinks is right. This way, it might help students stay alert and critical of the teaching material.

One could probably think of quite a few other use cases for ChatGPT to enhance education, and teachers should be encouraged to explore its possibilities. Rather than focusing on detection (which is difficult, if not impossible), it might be more fruitful to focus on the ethics around the use of LLMs in education and science. Of course, there are still many unanswered questions on the ethical side and much debate to be had. We at LLInC are happy to be part of this process and to explore the possibilities that AI can offer in education.